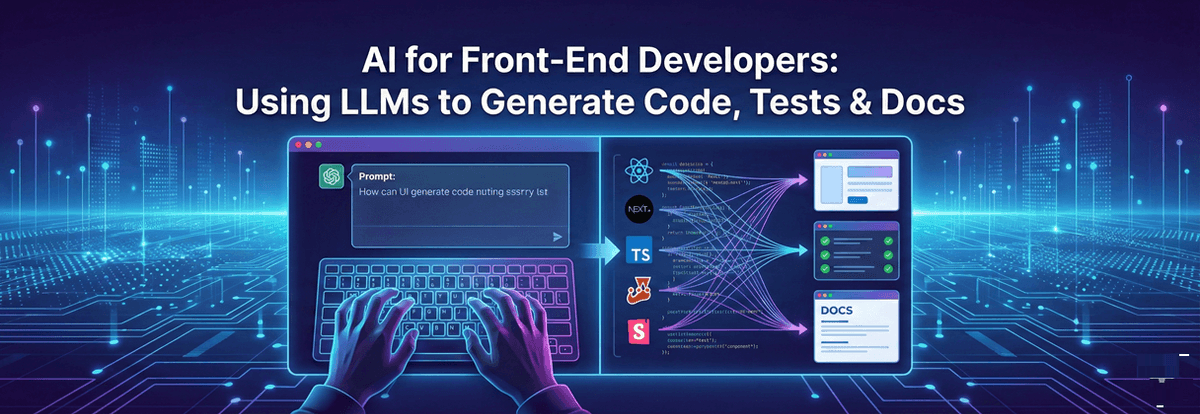

Turn AI into a force multiplier for your UI stack. Learn prompt patterns, guardrails, test-first workflows, Storybook automation, and CI integration to ship reliable, documented React and Next.js features faster.

A hands-on 2025 playbook for front-end engineers to use LLMs to scaffold React/Next.js components, write TypeScript, generate unit/e2e tests, create Storybook and MDX docs, and wire everything into CI with safety and quality controls.

Large Language Models (LLMs) are no longer just autocomplete. They are capable collaborators that can scaffold components, refactor TypeScript, write Jest/Testing Library specs, produce Playwright e2e flows, and even draft Storybook stories and MDX docs. Used well, they compress days of work into hours while improving consistency and coverage. Used poorly, they generate brittle code and silent regressions.

This guide shows front-end developers how to plug LLMs into a modern React/Next.js stack, with prompt patterns, guardrails, examples, and CI integration. You’ll learn how to generate code you can trust, validate it with tests, and ship discoverable, documented UI—faster.

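An AI-augmented front-end loop moves from a constrained prompt to a scaffolded component, then to unit and e2e tests, Storybook and MDX docs, and finally CI checks.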

Keep humans in the loop: you review architecture, naming, and edge cases; the model handles repetitive scaffolding and boilerplate.

Prompts should be deterministic, constrained, and contextual. Provide the target framework, file boundaries, and style rules.
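
As a rough sketch of what such a prompt could look like in practice, the helper below assembles one from a typed spec. The `PromptSpec` shape, the `buildPrompt` name, and the example file paths are hypothetical and not part of any library; adapt them to your own conventions.

```typescript
// Hypothetical shape for a constrained prompt; field names are illustrative.
interface PromptSpec {
  framework: string;          // target framework and version
  files: string[];            // the only files the model may create or edit
  allowedLibraries: string[]; // anything else counts as an unwanted dependency
  styleRules: string[];       // team conventions, a11y rules, and so on
  task: string;
}

// Builds the same prompt text for the same spec: lists are sorted and the
// template is fixed, so prompts can be diffed and reviewed like code.
function buildPrompt(spec: PromptSpec): string {
  const bullet = (items: string[]): string =>
    items
      .slice()
      .sort()
      .map((item) => `- ${item}`)
      .join("\n");

  return [
    `Target framework: ${spec.framework}`,
    `Only create or modify these files:\n${bullet(spec.files)}`,
    `Use only these libraries:\n${bullet(spec.allowedLibraries)}`,
    `Follow these rules:\n${bullet(spec.styleRules)}`,
    `Task: ${spec.task}`,
  ].join("\n\n");
}

// Example values are assumptions for illustration only.
const examplePrompt = buildPrompt({
  framework: "React 18 with TypeScript (strict)",
  files: ["components/Pagination.tsx", "components/Pagination.test.tsx"],
  allowedLibraries: ["react", "@testing-library/react"],
  styleRules: ["No `any`", "Use role-based ARIA attributes", "No new dependencies"],
  task: "Create a headless Pagination component.",
});
```

Keeping the template in code rather than in ad hoc chat messages means the prompt itself can go through review alongside the component it produces.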

Why it works: You bound the output to specific files, libraries, and accessibility rules, which steers the model away from hallucinated dependencies.

Ask the model to generate a minimal, typed component with exhaustive props and predictable state transitions.

Keep it headless; styling can be slots or wrapper classes. This makes the component reusable across design systems.
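
A minimal sketch of the kind of typed, headless core you might ask for, assuming a pagination component. All names here (`PaginationProps`, `PaginationAction`, `nextPage`, `handleAction`) are hypothetical. The logic is kept free of React so the state transitions can be checked in isolation, and a thin component can call it from its event handlers.

```typescript
// Hypothetical props for a headless pagination component (no styling concerns).
interface PaginationProps {
  page: number;
  totalPages: number;
  onPageChange: (page: number) => void;
}

type PaginationAction =
  | { type: "next" }
  | { type: "prev" }
  | { type: "goto"; page: number };

// Clamps into [1, totalPages]; assumes finite numbers and treats a
// totalPages below 1 as a single page.
function clampPage(page: number, totalPages: number): number {
  const last = Math.max(1, Math.floor(totalPages));
  return Math.min(last, Math.max(1, Math.floor(page)));
}

// Pure transition function: same input, same output, never out of range.
function nextPage(current: number, totalPages: number, action: PaginationAction): number {
  switch (action.type) {
    case "next":
      return clampPage(current + 1, totalPages);
    case "prev":
      return clampPage(current - 1, totalPages);
    case "goto":
      return clampPage(action.page, totalPages);
    default: {
      // Compile-time exhaustiveness check: a new action type without a
      // matching case makes this assignment fail to type-check.
      const unreachable: never = action;
      return unreachable;
    }
  }
}

// Notifies the parent only when the page actually changes.
function handleAction(props: PaginationProps, action: PaginationAction): void {
  const target = nextPage(props.page, props.totalPages, action);
  if (target !== props.page) {
    props.onPageChange(target);
  }
}
```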

Once the skeleton exists, ask the LLM to write behavior-driven tests that validate navigation, boundary conditions, ARIA attributes, and callbacks.

Prompt tip: Ask for tests that fail when accessibility contracts break—this locks in a11y as a non-regression requirement.
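
To illustrate what "fail when accessibility contracts break" can mean, here is a dependency-free sketch. It models the ARIA attributes of pagination buttons as plain data and reports contract violations. The `PageButtonAttrs` shape and both function names are hypothetical. In a real Jest and Testing Library suite, the same checks would typically be assertions against the rendered output.

```typescript
// Hypothetical attribute model for one page button inside a pagination nav.
interface PageButtonAttrs {
  "aria-label": string;
  "aria-current"?: "page";
}

// Computes the attributes a page button is expected to carry.
function pageButtonAttrs(page: number, current: number): PageButtonAttrs {
  const isCurrent = page === current;
  const attrs: PageButtonAttrs = {
    "aria-label": isCurrent ? `Page ${page}, current page` : `Go to page ${page}`,
  };
  if (isCurrent) {
    attrs["aria-current"] = "page";
  }
  return attrs;
}

// Returns every violated contract; an empty list means the contract holds.
function checkA11yContract(buttons: PageButtonAttrs[]): string[] {
  const failures: string[] = [];
  const currentCount = buttons.filter((b) => b["aria-current"] === "page").length;
  if (currentCount !== 1) {
    failures.push(`expected exactly one aria-current="page", found ${currentCount}`);
  }
  buttons.forEach((b, index) => {
    if (b["aria-label"].trim() === "") {
      failures.push(`button ${index} has an empty aria-label`);
    }
  });
  return failures;
}
```

A test then asserts that `checkA11yContract(...)` returns an empty list, so removing `aria-current` or blanking a label turns into a failing test rather than a silent regression.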

For critical flows (checkout, login, search), generate Playwright tests from acceptance criteria. Provide route URLs, data states, and selectors.

Pin endpoints and IDs in fixtures to make tests stable; ask the LLM to refactor selectors to role-based queries for resilience.

LLMs excel at turning prop types and examples into living docs. Prompt for CSF3 stories, controls, and accessibility notes. Then add an MDX page with usage and do/don’t patterns.

Automate docs generation in CI: extract prop tables from TypeScript and regenerate MDX on changes, leaving the LLM to fill narrative gaps.
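
The regeneration step can be sketched as a small pure function that turns prop metadata into a Markdown table for an MDX page. The `PropDoc` shape and `renderPropTable` name are hypothetical, and the metadata is hand-written here. A real pipeline would extract it from the TypeScript source with a docgen tool, and the output assumes your MDX setup has GFM tables enabled.

```typescript
// Hypothetical prop metadata; in practice this would be extracted from types.
interface PropDoc {
  name: string;
  type: string;
  required: boolean;
  description: string;
}

// Escapes pipes so union types such as "sm" | "lg" do not split a table cell.
function escapeCell(text: string): string {
  return text.replace(/\|/g, "\\|");
}

// Renders one table row per prop under a fixed header.
function renderPropTable(props: PropDoc[]): string {
  const header = "| Prop | Type | Required | Description |\n| --- | --- | --- | --- |";
  const rows = props.map(
    (p) =>
      `| ${escapeCell(p.name)} | ${escapeCell(p.type)} | ${p.required ? "yes" : "no"} | ${escapeCell(p.description)} |`
  );
  return [header].concat(rows).join("\n");
}
```

Because the table is derived mechanically, the LLM only needs to write the surrounding narrative, which keeps generated prose away from facts that the type system already knows.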

For Next.js App Router routes, keep components server-first and add small client islands only where interactivity is required. Ask the LLM to produce an RSC data loader and a client wrapper.

Keep Pagination headless, and make it a client component only if it needs event handlers; otherwise render static anchors so pagination URLs stay crawlable.
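
For the static-anchor case, the link data can be computed on the server with no client code at all. The sketch below assumes a `page` query parameter and a base path without an existing query string. Both are illustrative choices, as are the function names.

```typescript
interface PageLink {
  page: number;
  href: string;
  isCurrent: boolean;
}

// Builds a crawlable URL for a page number. Page 1 maps to the bare path so
// the first page has a single URL (a design choice, not a requirement).
function pageHref(basePath: string, page: number): string {
  return page <= 1 ? basePath : `${basePath}?page=${encodeURIComponent(String(page))}`;
}

// Produces one link per page; a server component can map these to <a> tags.
function pageLinks(basePath: string, current: number, totalPages: number): PageLink[] {
  const links: PageLink[] = [];
  for (let page = 1; page <= totalPages; page++) {
    links.push({ page, href: pageHref(basePath, page), isCurrent: page === current });
  }
  return links;
}
```

Each entry can be rendered as a plain anchor, with `aria-current="page"` on the entry where `isCurrent` is true.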

Automate quality checks in CI so generated code never lands broken.

Ask the LLM to write the CI file, then review tokens, permissions, and cache keys manually.

LLMs shine at mechanical refactors: converting JS to strict TS, extracting hooks, or flattening prop drilling. Provide a small example and rules (no any, explicit generics, exhaustive unions).

Run tsc --noEmit in CI to enforce type correctness.
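
As an illustration of the "small example and rules" you might hand the model, here is a loose JavaScript helper (kept as a comment) next to a strict TypeScript version. The `pluck` helper and the `Size` union are invented for this sketch.

```typescript
// Before (loose JavaScript, shown for comparison):
//   function pluck(items, key) {
//     return items.map(function (item) { return item[key]; });
//   }

// After: explicit generics and no `any`. The key must be a real property
// of T, and the return type follows from that property's type.
function pluck<T, K extends keyof T>(items: readonly T[], key: K): T[K][] {
  return items.map((item) => item[key]);
}

// Exhaustive union by construction: Record<Size, number> requires an entry
// for every member of Size, so adding "xl" to the union fails type-checking
// until the table is updated.
type Size = "sm" | "md" | "lg";

const sizeToPx: Record<Size, number> = {
  sm: 12,
  md: 16,
  lg: 20,
};
```

With the strict version, a call such as `pluck(users, "nmae")` is rejected at compile time, which is exactly the class of error a type-only CI step catches.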

Include a11y and i18n in your prompts so generated code adopts them by default:

LLM output mirrors your inputs—seed it with your team’s standards.

For large repos, prefer spec-first generation: provide JSON/TS interfaces for props and state, then ask the LLM to implement against the spec. For safe edits, combine AI with AST tools (ts-morph, codemods) to enforce exact changes and run automated codemods in CI.
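
A spec of this kind might look like the sketch below: interfaces that pin down the props and the possible item states, followed by an implementation written against them. Everything here (`PaginationSpecProps`, `PaginationItem`, `paginationItems`, `itemLabel`) is hypothetical and only meant to show the shape of the approach.

```typescript
// Spec: what the component accepts.
interface PaginationSpecProps {
  page: number;         // current page, 1-based
  totalPages: number;
  siblingCount: number; // pages shown on each side of the current page
}

// Spec: every kind of item the component may render.
type PaginationItem =
  | { kind: "page"; page: number; current: boolean }
  | { kind: "ellipsis" };

// Implementation against the spec: first page, last page, and the current
// page's neighbours are kept; any skipped run becomes a single ellipsis.
function paginationItems(props: PaginationSpecProps): PaginationItem[] {
  const items: PaginationItem[] = [];
  let previous = 0;
  for (let p = 1; p <= props.totalPages; p++) {
    const isEdge = p === 1 || p === props.totalPages;
    const isNear = Math.abs(p - props.page) <= props.siblingCount;
    if (!isEdge && !isNear) continue;
    if (p - previous > 1) items.push({ kind: "ellipsis" });
    items.push({ kind: "page", page: p, current: p === props.page });
    previous = p;
  }
  return items;
}

// Exhaustive handling: every `kind` in the spec has a branch, so adding a
// new kind without a case fails type-checking under strict settings.
function itemLabel(item: PaginationItem): string {
  switch (item.kind) {
    case "page":
      return item.current ? `[${item.page}]` : String(item.page);
    case "ellipsis":
      return "...";
  }
}
```

For example, `paginationItems({ page: 5, totalPages: 10, siblingCount: 1 }).map(itemLabel).join(" ")` yields `1 ... 4 [5] 6 ... 10`.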

Feed the spec to the model and require exhaustive props handling; then generate tests that assert behavior across all branches.

LLMs are the new power tool for front-end teams: they scaffold components, cement behavior with tests, and create clean, searchable docs. The win isn’t just speed—it’s quality at scale when you pair AI with strict TypeScript, a11y-first patterns, Storybook, and CI guardrails.

Start small: pick one component, write the prompt with constraints, generate code + tests + docs, and wire the CI. Iterate on your prompt library, encode your design tokens, and let the model do the repetitive work while you focus on architecture, UX nuance, and edge cases.

The result: faster delivery, higher coverage, consistent documentation—and happier users.

A passionate and detail-oriented frontend developer with strong knowledge of web development and a solid foundation in HTML, CSS, JavaScript, React.js, and Next.js.
